When Sampling Beats Training: Multi-Turn RL's Cost-Benefit Problem

Part 1 of a series on practical post-training pipelines for deployed agents.

When you deploy agents in enterprise environments, compute spend shows up as time. Training runs take hours, sometimes days. Inference takes seconds per turn, multiplied across users and retries. You have to decide where you want to pay.

This series is a set of practical posts on how I think about that tradeoff. Each post uses a real benchmark or use case and a concrete pipeline, with code, datasets, and checkpoints.

I’ll start with Tau2-bench, a multi-turn tool-use benchmark where an agent guides a user through 20+ turns of diagnostics before solving a specific issue. Credit assignment is what breaks. The task reward only arrives at the final step.

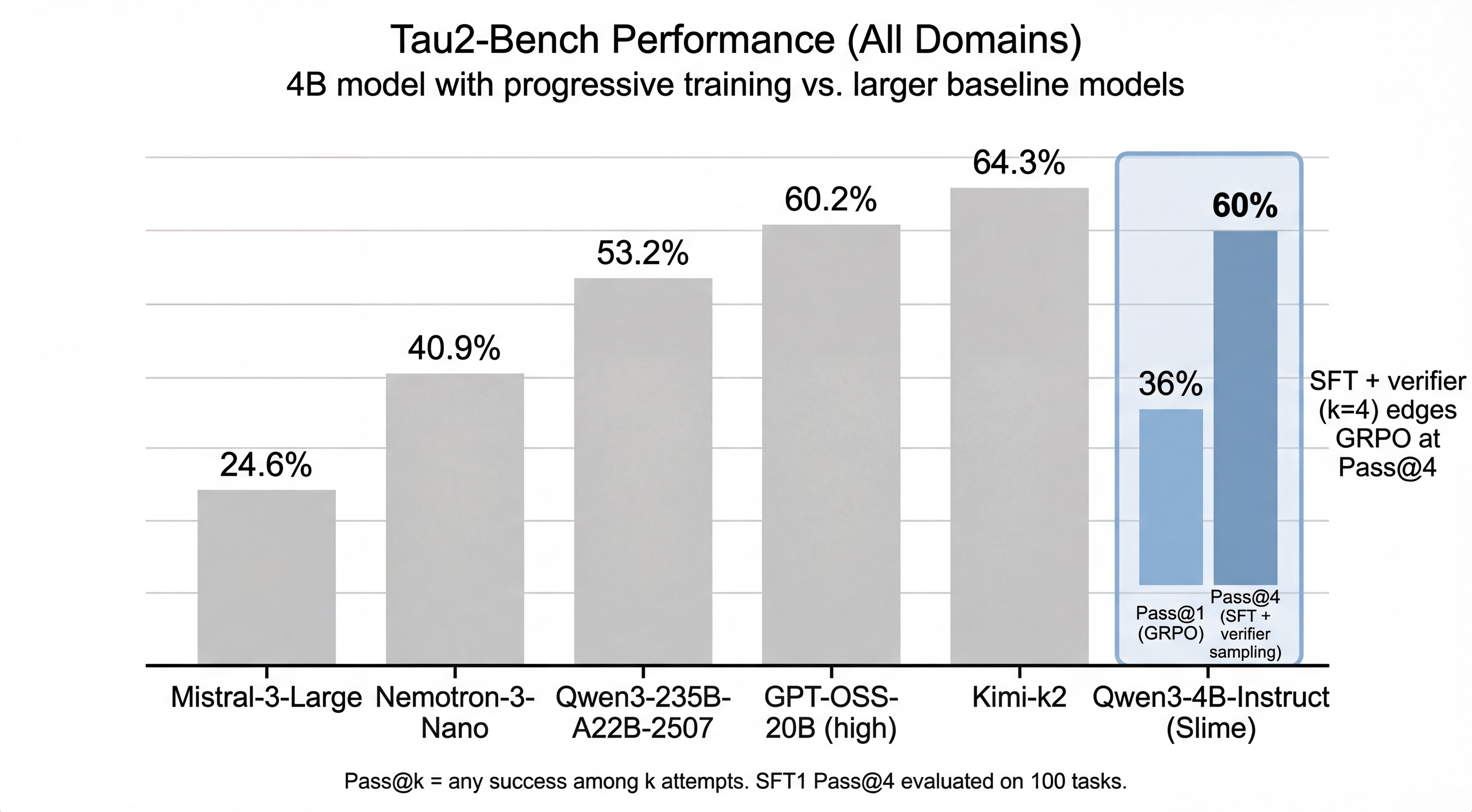

TL;DR: SFT1 (SFT+RFT) plus a verifier at inference time hit 60% Pass@4 on the test split. In my runs, GRPO ranged from 55% to 59% Pass@4 due to variance from the user simulator. GRPO still improved Pass@1. If you can afford sampling at deployment, that changes the cost-benefit case for RL.

I also trained Qwen3-4B using SFT → rejection fine-tuning (RFT) → GRPO. One representative run reached 57.1% Pass@4, and the best run reached 59% Pass@4. I walk through the recipe below, plus what changed my mind about multi-turn RL. For tasks with reliable verifiers, most of the improvement comes from sample selection and Pass@k under sampling.

I ran a Pass@4 ablation on the SFT1 checkpoint (the checkpoint before GRPO). With the same sampling setup, it reached 60% Pass@4. It slightly exceeded GRPO in that run.

Tau2-bench performance (all domains). The highlighted slot shows Pass@1 for GRPO and Pass@4 for SFT1 plus verifier-based sampling (k=4).

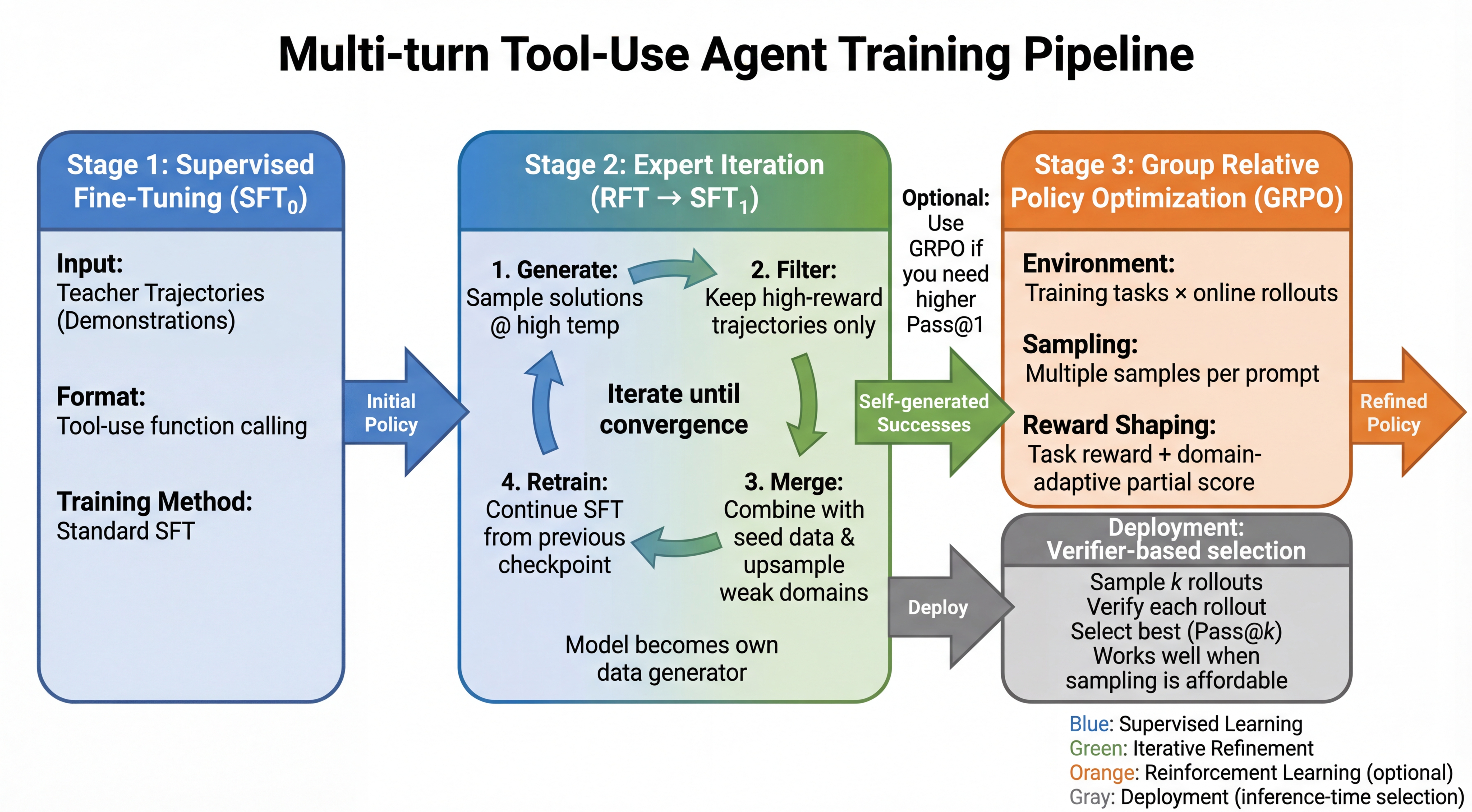

Training and deployment pipeline for multi-turn tool-use agents. GRPO is optional. Verifier-based selection is the deployment path when sampling is affordable.

Links: training data, SFT1 checkpoint, GRPO checkpoint, and code.

Notes.

Pass@khere means “any success among k attempts” (not thepass^kleaderboard estimate). Rough compute budget: training ~2 hours on 8×H100; evaluation for Pass@4 ~2 hours on 2×H100.

Where the Work Is

In these pipelines, most of the effort goes into the SFT data. If the model cannot follow the protocol, it cannot generate useful rollouts, and neither RFT nor GRPO has much to optimize. I treat the baseline as a model that can follow the protocol plus a verifier that can score rollouts in a way you trust.

For tau2-bench, good SFT data needs to teach basics that are easy to gloss over:

- Valid tool calls and arguments across 30+ tools

- Turn discipline (one action, then wait for the observation)

- Dual-control language (coach the user, do not pretend you can click buttons)

- Recovery behavior when something fails or is ambiguous

RFT is a practical middle step. You sample, score with a verifier, and train on the trajectories that worked. The RAFT paper shows how far this can go with verifiable rewards, and how much of the perceived gap to GRPO comes down to sample selection (for example, avoiding prompts where every sampled rollout is wrong).

The same paper also shows a limitation of positive-only selection. Entropy collapses fast. Early gains come quickly, then exploration dries up. In practice, that shows up as a bigger difference between Pass@1 and Pass@k. RL can still help when you care about Pass@1, or when sampling is expensive.

Context: Credit Assignment in Multi-Turn Tool-Use

In a telecom troubleshooting run, the agent might ask the user to grant app permissions at step 15, a critical action. But the task reward only arrives at the final step.

How does the model learn that step 15 mattered?

Standard outcome-based rewards (0/1) provide essentially zero gradient across intermediate steps. The model sees no signal until task completion. For complex tool-use, this breaks training.

How Do We Do Multi-Turn RL?

Stage 1: SFT (Teaching Protocol)

Before a model can optimize tool-use, it needs the basics: one action per turn, then wait. Thirty-plus tools with complex argument structures. And in telecom, a constraint that trips up most systems - the agent coaches users through diagnostics rather than executing them directly.

Without SFT, RL training will struggle to find any learning signal. In our setup, SFT mainly teaches the protocol (valid tool calls, turn-taking, and dual-control). On its own, it underperformed the baseline (8.6% vs 14.3%), but it produced a model that could generate usable rollouts for filtering and RL.

In our runs, pure SFT was not enough. The real gains came once we started filtering for successful trajectories (Stage 2).

Stage 2: Rejection Fine-Tuning (RFT)

After SFT, the model can complete tasks but does so inconsistently. Sampling multiple rollouts and keeping only successes concentrates the training distribution on viable strategies. The filtering is simple: sample 4-8 attempts at temperature 0.8, keep trajectories that succeed (reward >= 1.0), and for tasks with no successes, keep the highest partial score if it clears 0.6.

Recent work on RAFT shows that RFT-style training (rejection sampling on verifier rewards) can approach GRPO performance with faster early-stage learning, and that a large part of the gap comes down to sample selection and exploration dynamics (e.g., filtering prompts where all sampled responses are wrong). Combined with our observation that GRPO’s gains are much larger at Pass@4 than at greedy Pass@1, this raises a practical question: if you have a verifier at deployment, is RL worth the training cost?

The published tau2-sft-seed-v3 dataset results from this filtering.

Stage 3: GRPO + Turn-Level Reward Shaping

GRPO solves credit assignment through two mechanisms:

Group-based advantage estimation: For each prompt, sample K trajectories, score them, and train the model to increase probability of high-reward actions relative to the group average. The model learns “this action was better than my other attempts” rather than “this action is objectively good.”

Dense reward shaping: Tau2-bench provides turn-level evaluation (action checks, communication checks, environment assertions). We extract partial scores and shape rewards:

shaped_reward = task_reward + alpha * partial_score

This provides gradient at every turn, not only at task completion.

Results

| Stage | Overall | Airline | Retail | Telecom |

|---|---|---|---|---|

| Baseline (Qwen3-4B-Instruct) | 14.3% | 5.0% | 16.0% | 20.0% |

| SFT | 8.6% | 5.0% | 20.0% | 0.0% |

| SFT1 | 27.0% | 20.0% | 50.0% | 7.5% |

| GRPO (Pass@1, greedy) | 32.9% | 15.0% | 76.0% | 4.0% |

| GRPO (Pass@4) | 57.1% | 50.0% | 76.0% | 44.0% |

| SFT1 + verifier (Pass@4) | 60.0% | 30.0% | 82.5% | 52.5% |

All rows are greedy (Pass@1) unless otherwise noted. Pass@4 uses sampling with a verifier.

Ablation: SFT1 with Test-Time Selection Edges Out GRPO at Pass@4

I ran the ablation I left open earlier. I evaluated the SFT1 checkpoint with the same sampling setup used for Pass@4. Pass@1 here means the first sampled attempt. Pass@4 means at least one success among 4 attempts. These Pass@1 numbers are sampled, so they will not match the greedy Pass@1 results above.

SFT1 vs GRPO (same sampling setup)

| Model | Pass@1 | Pass@4 |

|---|---|---|

| SFT1 | 29% | 60% |

| GRPO | 36% | 59% |

GRPO still helps Pass@1. At Pass@4, SFT1 slightly exceeded it in this run. If you can afford 4 attempts with a verifier, a strong SFT1 checkpoint plus test-time selection can match RL without running RL.

Eval config

Eval command hyperparameters:

--domains airline,retail,telecom--task-split test--num-samples 4--temperature 0.8--top-p 1.0--top-k 20

Environment variables:

TAU2_USE_COMPRESSED_PROMPTS=0TAU2_USER_MODEL=gpt-4.1-miniTAU2_USER_TEMPERATURE=0.7

Policy server (sglang):

--model-path Jarrodbarnes/Qwen3-4B-tau2-sft1(for SFT1 eval) orJarrodbarnes/Qwen3-4B-tau2-grpo-v1(for GRPO eval)--tp 1--mem-fraction-static 0.70--port 30000

What Didn’t Work

Pure SFT made things worse. Training on unfiltered trajectories dropped accuracy from 14.3% (baseline) to 8.6%. The model learned to imitate the format of tool calls without learning when to use them.

Telecom is still the hardest domain. Retail reaches 76% while telecom stays at 44%. When the agent must instruct users through physical actions rather than execute them directly, error propagation compounds across turns.

Sparse rewards break credit assignment. With 20+ turn episodes and binary outcome rewards, early actions receive near-zero gradient. Turn-level partial scores were necessary to make training converge.

Implementation Notes

Dual-control (telecom): Diagnostic actions are user-only. The agent instructs rather than executes:

Agent: "Please toggle airplane mode ON, wait 10 seconds, then OFF."

User: "Done. Still no data."

Function calling: Qwen3 uses <tool_call>{...}</tool_call>. Include </tool_call> in stop sequences.

User simulator: Training uses a local instruct model on port 30001. Evaluation uses GPT-4.1-mini.

Resources

- Code: github.com/jbarnes850/Tau2-RL-Pipeline

- Checkpoints: Qwen3-4B-tau2-sft1 (SFT1), Qwen3-4B-tau2-grpo-v1 (GRPO)

- Dataset: tau2-sft-seed-v3

- Benchmarks: tau2-bench

The question I started with - where should compute go? - has a messier answer than I expected. If you have a verifier at deployment, sampling buys you a lot. GRPO still helps Pass@1, but the gap narrows. The honest answer is: it depends on what you can afford at inference time.